Integer Linear Programming in OpenGM

Today I will discuss how some of the inference algorithms in OpenGM work, especially the linear programming ones.

There are many ways to solve an energy minimization problem.

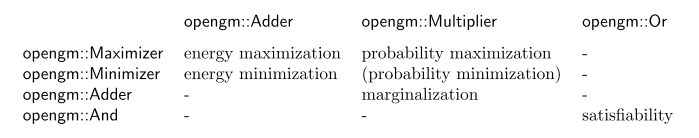

The table below gives an idea of the different accumulation operations used for inference:

That is, to solve an energy minimization problem in OpenGM, we combine opengm::Adder (the operation that combines the factor values) with opengm::Minimizer (the accumulation over all labelings).

We also have to choose an inference algorithm. OpenGM provides the following:

- Message Passing
    - Belief Propagation
    - TRBP
- TRW-S
- Dynamic Programming
- Dual Decomposition
    - Using Sub-gradient Methods
    - Using Bundle Methods
- A* Search
- (Integer) Linear Programming
- Graph Cuts
- QPBO
- Move Making
    - Expansion
    - Swap
    - Iterative Conditional Modes (ICM)
    - Lazy Flipper
    - LOC
- Sampling
    - Gibbs
    - Swendsen-Wang
- Wrappers around other libraries
    - libDAI
    - MRF-LIB
- BruteForce Search

Here we will concentrate on (integer) linear programming and its relaxation. Let's see how it goes!

Any energy minimization problem can be formulated as an (integer) linear program. Alternatively, we can solve a relaxation of the original problem, obtained by relaxing the system of inequalities, that is, by dropping the integrality constraints. The optimal value of the relaxation is a lower bound on the minimum energy.

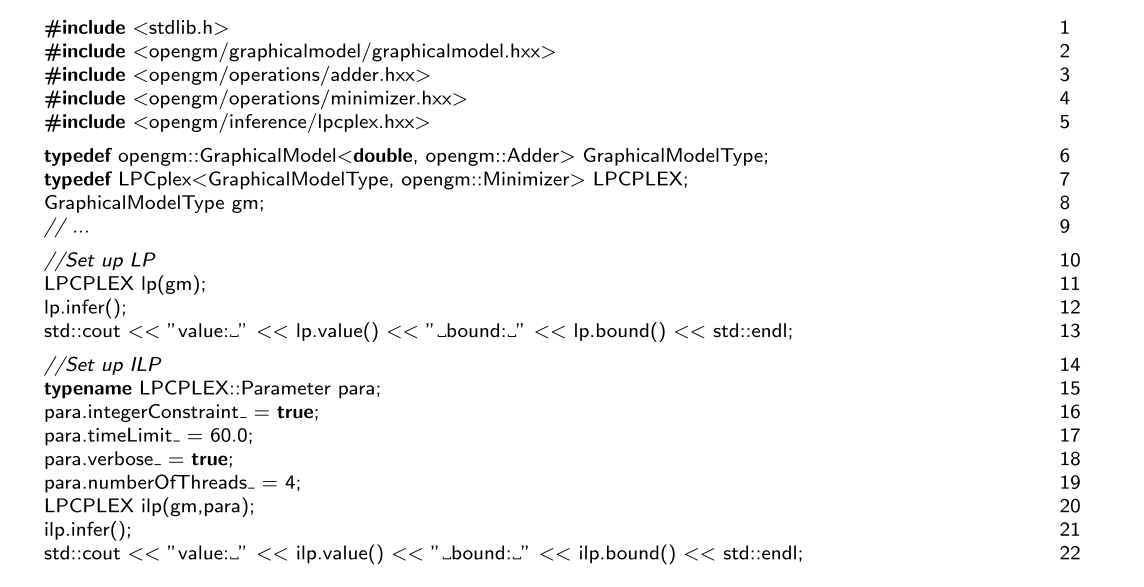
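For reference, one common way to write this down is the relaxation over the local polytope. The notation here is my own assumption and may differ from the figure: $V$ is the set of variables, $F$ the set of higher-order factors, $\varphi$ the factor energies, and $\mu$ the indicator variables of the linear program.

$$
\begin{aligned}
\min_{\mu}\quad & \sum_{v \in V} \sum_{x_v} \varphi_v(x_v)\,\mu_v(x_v) \;+\; \sum_{f \in F} \sum_{x_f} \varphi_f(x_f)\,\mu_f(x_f) \\
\text{s.t.}\quad & \sum_{x_v} \mu_v(x_v) = 1 && \forall v \in V \\
& \sum_{x_f \,:\, x_f(v) = x_v} \mu_f(x_f) = \mu_v(x_v) && \forall f \in F,\; v \in f,\; x_v \\
& \mu_v(x_v),\, \mu_f(x_f) \in \{0, 1\} && \text{(integer program)}
\end{aligned}
$$

The relaxation replaces the last line with $\mu_v(x_v),\, \mu_f(x_f) \in [0, 1]$. If the relaxed solution happens to be integral, it is also optimal for the original problem.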

The corresponding parameter class takes many optional parameters that can be used to tune the solver. The most important ones are:

- integerConstraint_: switches the solver to integer mode

- numberOfThreads_: the number of threads that should be used

- verbose_: switches the CPLEX output on

- timeLimit_: stops the method after N seconds of real time

A sample is shown below: